About DVs

Contributions, Behind the Scenes

27 Sept 2024

Author: Viktor Redecker, Data Scientist

In August, the Obol Contributions Program was announced. Stake deployed on Obol DVs contributes 1% of staking rewards to the Obol Collective’s ‘1% for Decentralisation’ retroactive fund (RAF). The challenge for the data team at DV Labs was to track those Contributions and provide an API that DV staking products could easily integrate.

In this article, data scientist Viktor Redecker explains the work that went into the Obol Contributions program, behind the scenes.

Tracking Contributions: Behind the Scenes

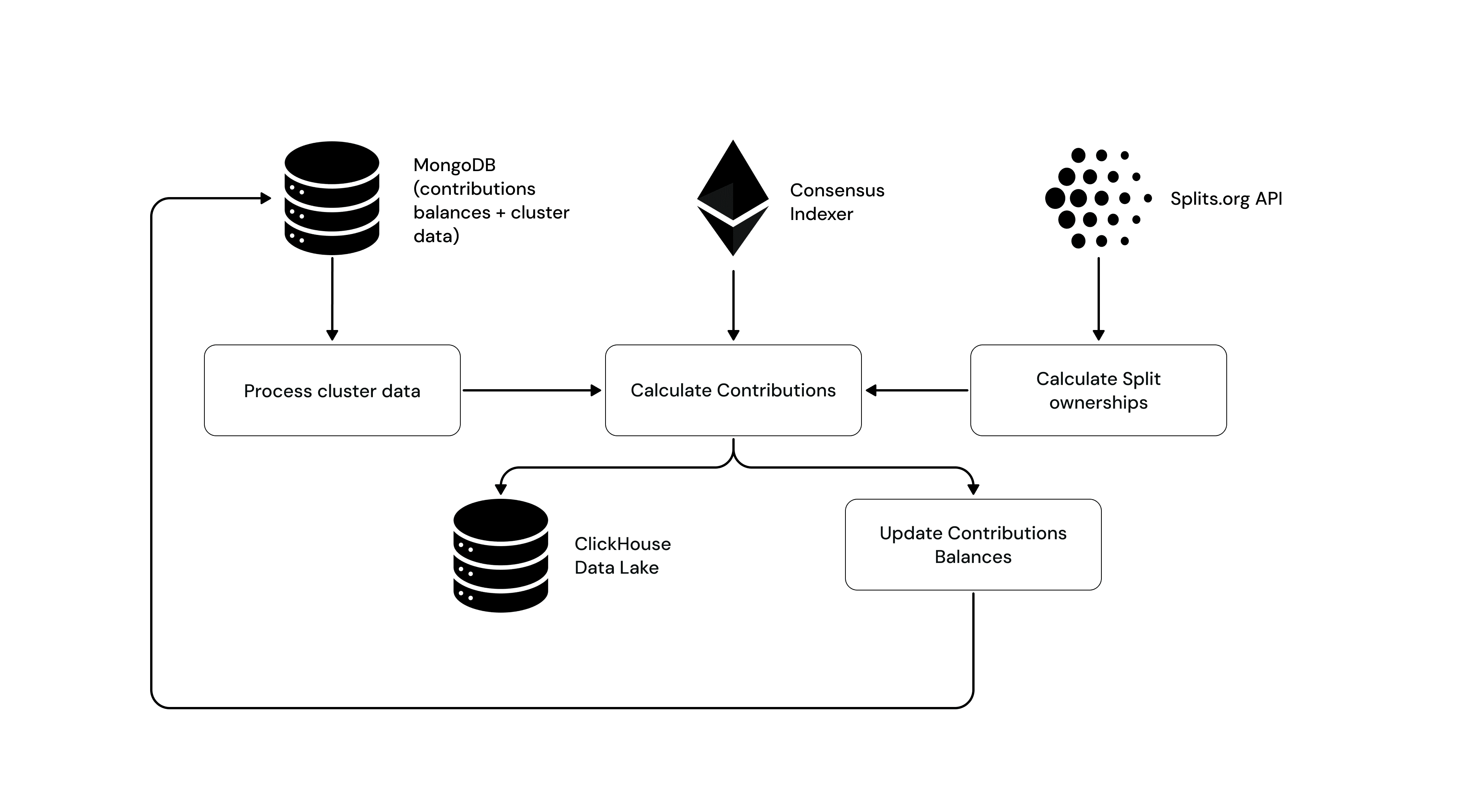

Our partners wanted to use our API to track their contributions to the retroactive fund (RAF). Our challenge was to track how much each partner’s Distributed Validators contribute to the Obol RAF pool. For each DV cluster, the consensus rewards end up in a splitter contract. Our first step is therefore to gather all the splitter contracts that list the Obol RAF address as a recipient, which we do with a custom query built on the splits.org SDK. Once we have collected the relevant splitter contract addresses, we match them to DV clusters using the fee recipient address. This allows us to find the associated DV cluster metadata, such as the validator public keys for those clusters. For each validator public key, we read the consensus rewards data using an on-chain indexer called “GotEth”, built by Miga Labs. Finally, we divide the rewards across the split recipient addresses.
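To make the last step concrete, here is a minimal sketch of dividing a validator's rewards across split recipients. It is an illustration under stated assumptions, not our production code: the function names, the placeholder addresses, the use of gwei as the unit, and the idea that each recipient's share is stored as a fraction summing to 1 are all hypothetical.

```python
from decimal import Decimal

# Placeholder address, for illustration only (not a real RAF address).
RAF_ADDRESS = "0xRAF"


def split_rewards(reward_gwei, allocations):
    """Divide one validator's consensus rewards across split recipients.

    `allocations` maps recipient address -> fraction of the split.
    The fractions are assumed to sum to exactly 1.
    """
    total = sum(allocations.values())
    if total != Decimal("1"):
        raise ValueError(f"allocations sum to {total}, expected 1")
    return {
        addr: Decimal(reward_gwei) * share
        for addr, share in allocations.items()
    }


def raf_contribution(rewards_by_pubkey, allocations, raf_address=RAF_ADDRESS):
    """Sum the RAF's share of rewards over all validators in one cluster."""
    total = Decimal(0)
    for reward_gwei in rewards_by_pubkey.values():
        shares = split_rewards(reward_gwei, allocations)
        total += shares.get(raf_address, Decimal(0))
    return total


# Hypothetical cluster: two validators behind one splitter contract.
allocations = {"0xRAF": Decimal("0.01"), "0xOperator": Decimal("0.99")}
rewards_by_pubkey = {"0xaaa": 1_000_000, "0xbbb": 2_000_000}
cluster_total = raf_contribution(rewards_by_pubkey, allocations)
```

Using `Decimal` rather than floats keeps the per-recipient amounts exact, which matters when the same numbers are later summed and compared across runs.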

We then run a few checks for faulty data, duplicates, etc., before calculating the final percentage of the rewards that each address has contributed to the pool. Now that we’ve calculated the contributions, we save the data in two databases. A versioned history goes into ClickHouse, which allows us to see contributions over time, the balance on a certain day, etc. The current balances are saved to MongoDB, which serves our production API.
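The checks and the percentage calculation could look roughly like the sketch below. The record layout (`address`, `pubkey`, `day`, `amount_gwei`), the choice to treat a missing amount as faulty, and the function names are assumptions made for this example; the real pipeline runs on Spark and its checks may differ.

```python
from decimal import Decimal


def clean_records(records):
    """Drop rows with a missing amount and exact duplicates.

    A duplicate is assumed to be a repeated (address, pubkey, day) key.
    """
    seen = set()
    cleaned = []
    for rec in records:
        if rec.get("amount_gwei") is None:
            continue  # treated as faulty data in this sketch
        key = (rec["address"], rec["pubkey"], rec["day"])
        if key in seen:
            continue  # duplicate row
        seen.add(key)
        cleaned.append(rec)
    return cleaned


def contribution_shares(records):
    """Return each address's share of the pool, as a percentage."""
    totals = {}
    for rec in records:
        addr = rec["address"]
        totals[addr] = totals.get(addr, 0) + rec["amount_gwei"]
    pool = sum(totals.values())
    if pool == 0:
        return {addr: Decimal(0) for addr in totals}
    return {
        addr: Decimal(amount) * 100 / Decimal(pool)
        for addr, amount in totals.items()
    }


# Hypothetical input with one duplicate and one faulty row.
records = [
    {"address": "0xA", "pubkey": "0x01", "day": "2024-09-01", "amount_gwei": 3000},
    {"address": "0xA", "pubkey": "0x01", "day": "2024-09-01", "amount_gwei": 3000},
    {"address": "0xB", "pubkey": "0x02", "day": "2024-09-01", "amount_gwei": 1000},
    {"address": "0xB", "pubkey": "0x03", "day": "2024-09-01", "amount_gwei": None},
]
cleaned = clean_records(records)
shares = contribution_shares(cleaned)
```

In this example the duplicate row for `0xA` and the row with no amount are dropped, so `0xA` ends up with 75% of the pool and `0xB` with 25%.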

We run this pipeline with Spark once per day. Over time we hope to serve real-time queries, which is why we opted to also use a ClickHouse database. This architecture opens the door to more advanced queries and endpoints in the future.

A Team Effort

For me, this was a cool project that taught me a lot about on-chain data, smart contracts, and how we use splits. As I’m still relatively new to the blockchain world, I learned a ton.

The project was definitely a team effort. I was mostly responsible for the data pipeline, which means determining which data we need to combine and how to combine it; in other words, how to accurately track contributions. Two other colleagues handled the API endpoint that queries the contributions in MongoDB, and the project planning. And of course, our product designer and frontend engineer worked to integrate contributions into the DV Launchpad dashboard.

A Basis for Future Collaboration

Now that the contributions API is up and running, and proven with multiple partner integrations, our work has shifted to maintaining the API and working with partner teams to ensure a smooth integration into their staking products. We hope to see even more staking products integrate contributions, and we are ready to answer any questions they might have.

If this is interesting to you, don’t hesitate to get in touch with me directly, or use our jobs site to apply for an open role or submit a general application. Thanks for reading, and keep an eye out for future developments of the Contributions program!